Don’t Trust Your AI: Why Blind Confidence in Surveillance Tech Is Your Biggest Security Risk

AI promises precision, but blind confidence breeds danger. Across North America, enterprises are discovering that the biggest threat to their security isn’t hackers—it’s misplaced faith in the algorithms guarding their doors.

- Introduction
- Quick Summary / Key Takeaways
- Background & Relevance
- What Happens When We Trust AI Too Much?
- False Positives and False Security
- The Compliance Mirage
- Explainable AI and the Human Element
- From Automation to Awareness
- Comparison Table
- Real-World Lessons
- The Ethical Horizon
- Common Questions (FAQ)
- Conclusion & Call to Action
- Security Glossary (2025 Edition)

Introduction

In 2024, the FBI’s Internet Crime Complaint Center reported more than 21,000 incidents tied to compromised IoT and camera systems—a 32% increase year-over-year. Yet in boardrooms from Toronto to Texas, executives still repeat the same mantra: “We’re covered. Our AI does that.”

ArcadianAI was built on the uncomfortable truth that this statement is often false. Blind faith in “AI-powered” surveillance has become the most dangerous form of complacency. Verkada sells confidence wrapped in clean dashboards; Genetec hides complexity behind polished compliance checklists; Eagle Eye and Rhombus promise the cloud will take care of it. But AI cannot save you from context it cannot understand.

The illusion of intelligence is more dangerous than ignorance.

Quick Summary / Key Takeaways

- Overconfidence in AI is a new corporate vulnerability.
- “AI-powered” systems often detect motion, not meaning.
- False positives desensitize teams and mask real threats.
- Compliance does not equal transparency or ethics.
- ArcadianAI’s Ranger blends context, correlation, and conscience.

Background & Relevance

The AI surveillance market in North America surpassed USD 16 billion in 2024 (MarketsandMarkets), growing at a 14% CAGR. But Gartner warns that by 2026, 40% of AI deployments will fail due to “explainability deficits.” CISA’s 2025 bulletin highlights an overlooked vector: AI decision errors within physical security systems that lead to operational and legal risk.

Security leaders are realizing that buying more “AI cameras” doesn’t equal better protection. The real challenge is trust—how to know what the algorithm meant when it raised or ignored an alarm.

What Happens When We Trust AI Too Much?

Humans are wired for automation bias: the tendency to defer to machines. When an AI flag disappears from a Verkada dashboard, managers assume the issue is resolved. When Genetec’s analytics trigger fewer alarms, executives celebrate “efficiency.” In truth, both could mean the algorithm simply stopped noticing anomalies.

A 2024 case in Michigan revealed how over-trust led to disaster: an automated license-plate recognition (ALPR) system misread a plate, causing a wrongful arrest. The AI was 97% accurate—but for the victim, the 3% mattered.
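To make the 3% concrete, the arithmetic below shows how a small per-read error rate scales with volume. This is a rough sketch: it treats “97% accurate” as a 3% per-read error rate, which is a simplifying assumption, and the daily read volumes are hypothetical figures chosen for illustration, not data from the Michigan case.

```python
def expected_misreads(reads_per_day: int, accuracy: float) -> float:
    """Expected number of wrong plate reads per day at a given per-read accuracy."""
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    return reads_per_day * (1.0 - accuracy)


# Hypothetical daily volumes; only the 97% figure comes from the article.
for reads in (1_000, 10_000, 100_000):
    misreads = expected_misreads(reads, 0.97)
    print(f"{reads:>7} reads/day -> about {misreads:.0f} misreads/day")
```

Even under these simplified assumptions, a system that sounds highly accurate can produce hundreds of wrong reads a day once volume grows, and each one is a potential wrongful stop if nobody checks it.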

Blind confidence converts small errors into systemic failures. The camera sees, the AI interprets, and humans surrender judgment.

ArcadianAI’s founders learned early: intelligence without accountability is negligence. Ranger was designed to restore that missing accountability through layered context and adaptive reasoning.

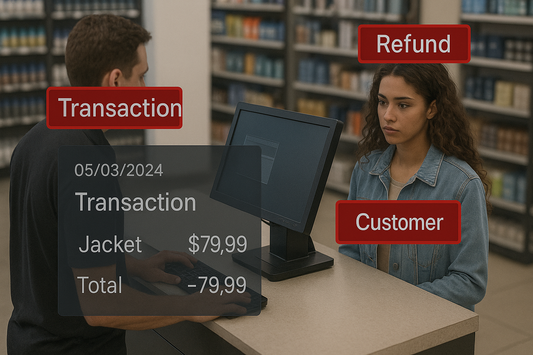

False Positives and False Security

In retail, false alarms account for more than 94% of all video alerts (Retail Council of Canada, 2024). Static analytics (motion detection, line crossing, object left/removed) generate constant noise until staff stop paying attention. Verkada calls it “activity insight.” In practice, it’s white noise that hides real intrusions.

Traditional AI models rely on single-frame logic: detect person, car, or bag. They ignore relationships and timing. A person “lingering” for six seconds might be a thief—or a father waiting for his child.

ArcadianAI’s Ranger eliminates this blindness. By reading context across multiple cameras and time windows, it distinguishes behavioral intent from motion artifacts. Ranger’s algorithms don’t just see what happened; they infer why.
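The difference between single-frame logic and reading context across cameras and time can be illustrated with a toy rule. The sketch below is not Ranger’s algorithm, which this article does not describe in detail. It assumes that an upstream step has already linked sightings of the same person across cameras (the hypothetical `track_id`), and the thresholds `min_dwell_s` and `min_cameras` are invented for illustration. It also does not infer intent; it only shows how combining evidence over time and space changes what gets flagged.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    track_id: str     # hypothetical ID linking one person across cameras
    camera: str
    timestamp: float  # seconds since the start of observation


def flag_lingering(detections, min_dwell_s=120.0, min_cameras=2):
    """Return track IDs seen on enough cameras for long enough to merit review."""
    by_track = defaultdict(list)
    for det in detections:
        by_track[det.track_id].append(det)

    flagged = []
    for track_id, dets in by_track.items():
        times = [d.timestamp for d in dets]
        dwell = max(times) - min(times)       # total time span of the track
        cameras = {d.camera for d in dets}    # distinct cameras that saw it
        if dwell >= min_dwell_s and len(cameras) >= min_cameras:
            flagged.append(track_id)
    return sorted(flagged)


detections = [
    Detection("a", "entrance", 0.0),
    Detection("a", "entrance", 6.0),       # six seconds on one camera
    Detection("b", "entrance", 0.0),
    Detection("b", "loading_dock", 90.0),
    Detection("b", "stockroom", 200.0),    # 200 seconds across three cameras
]
print(flag_lingering(detections))
```

A single-frame detector would report both tracks as “person detected.” The rule above ignores the six-second pause at the entrance and surfaces only the track that persists across several areas.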

That distinction saves thousands of false dispatches each month for monitoring centers and enterprises across North America.

The Compliance Mirage

Vendors love acronyms—NDAA, GDPR, SOC 2, ISO 27001. Compliance badges make buyers feel safe. But compliance ≠ security.

Genetec markets “NDAA-ready” systems while still depending on third-party analytics modules whose provenance clients never see. Verkada’s 2021 breach exposed 150,000 camera feeds, including those in schools and hospitals, yet its brochures still highlight “zero-trust cloud architecture.”

ArcadianAI took a different route: transparency by design. Ranger’s pipelines are fully traceable—from the moment video enters the Bridge device to the moment alerts reach the dashboard. Every inference is logged, explainable, and auditable.

Compliance should be a floor, not a ceiling.

Explainable AI and the Human Element

True intelligence invites scrutiny. Explainable AI (XAI) isn’t a buzzword—it’s a survival mechanism. When AI outputs can be inspected, correlated, and challenged, false positives collapse and accountability grows.

Ranger’s architecture was built for explainability:

- Multi-modal reasoning: merges video, time, and spatial context.
- Transparent inference logs: every alert carries its “why.”
- Human-in-the-loop review: allows operators to reinforce or correct detections, feeding adaptive learning.
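What an alert that “carries its why” might look like can be sketched as a small record type. This is an illustrative data structure only: the field names, the `review` method, and the example values are assumptions made for this sketch and do not reflect Ranger’s actual log schema.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List, Optional


@dataclass
class AlertRecord:
    alert_id: str
    camera: str
    label: str
    confidence: float
    reasons: List[str] = field(default_factory=list)  # the "why" behind the alert
    operator_verdict: Optional[str] = None            # set during human review

    def review(self, verdict: str) -> None:
        """Record the operator's decision so it can be audited or used as feedback."""
        if verdict not in ("confirmed", "rejected"):
            raise ValueError("verdict must be 'confirmed' or 'rejected'")
        self.operator_verdict = verdict


# Hypothetical alert with its supporting evidence attached.
record = AlertRecord(
    alert_id="alert-001",
    camera="dock-2",
    label="loitering",
    confidence=0.81,
    reasons=["present for 200 s", "seen on 3 cameras", "outside scheduled shift"],
)
record.review("rejected")
print(json.dumps(asdict(record), indent=2))
```

Keeping the evidence and the operator’s verdict in the same record means an auditor can later ask both why the system raised the alert and what a human decided about it.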

In contrast, closed systems treat AI like magic—black boxes that demand belief. Security should never require faith.

From Automation to Awareness

2025 marks the shift from passive automation to active awareness. Enterprises no longer need motion detectors—they need decision engines.

ArcadianAI’s Ranger operates as an agentic intelligence layer: planning, interpreting, and adapting in real time. It observes across cameras, learns scene behavior, and predicts anomalies before they escalate.

Verkada sells visibility. ArcadianAI delivers comprehension.

That difference defines the future of physical security in an age where threats evolve faster than firmware updates.

Comparison Table

| Platform | Transparency | Context Awareness | False Positive Handling | Human-in-the-Loop | Deployment Type |
|---|---|---|---|---|---|
| Verkada | Low | Object-level only | Reactive suppression | None | Closed cloud |
| Genetec | Medium | Rule-based | Manual tuning | Optional | Hybrid VMS |
| Eagle Eye Networks | Medium | Basic | Reactive | Limited | Cloud VSaaS |
| Rhombus | Medium | Static AI | Basic filtering | None | Cloud VSaaS |
| ArcadianAI Ranger | High | Adaptive, scene-aware | Proactive suppression | Built-in | Cloud-native + Bridge edge |

Real-World Lessons

1. The California Distribution Center Case

A logistics company deployed 300 “smart cameras” from a popular VSaaS brand. False alarms exceeded 7,000 per week. When a real break-in occurred, alerts were ignored. After switching to ArcadianAI, contextual correlation reduced alerts by 93% while catching the same intruder pattern two nights later.

2. The Toronto Retail Chain

A luxury retailer used Genetec analytics to flag “person loitering.” The AI misclassified cleaning staff nightly. Ranger’s contextual engine recognized uniforms, patterns, and schedules—eliminating 1,200 monthly false dispatches.

3. The Texas School Pilot

Traditional motion analytics triggered panic alerts whenever sports equipment moved. Ranger distinguished authorized activity from anomalies by cross-camera context and time-of-day learning. The result: safety without chaos.

The Ethical Horizon

AI is rewriting the social contract of surveillance. Every detection decision holds moral weight—who gets flagged, who gets ignored, who gets punished by algorithmic error.

ArcadianAI believes trust must be earned through transparency. Ranger’s anonymization and audit modules align with GDPR and proposed Canadian AI legislation (AIDA). Faces can be processed as metadata without permanent identifiers, ensuring situational awareness without voyeurism.

Ethical surveillance is not a luxury—it’s a prerequisite for legitimacy.

Common Questions (FAQ)

Q1: Is AI surveillance completely reliable?

No. Even top systems misread context. Without human oversight and explainability, false positives and blind spots are inevitable.

Q2: Why do vendors hide AI limitations?

Marketing. “AI-powered” sells better than “AI-assisted.” But transparency wins long-term contracts.

Q3: How can I audit my current AI system?

Demand inference logs, retraining data lineage, and scenario testing under real conditions. If a vendor can’t explain an alert, they can’t secure you.
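One simple starting point for such an audit is to measure how many alerts operators actually judged to be real, broken down by the rule that fired. The sketch below assumes you can export reviewed alerts as `(rule, was_real)` pairs; that export format and the sample data are hypothetical, not something a particular vendor is known to provide.

```python
from collections import Counter


def false_alarm_share(reviewed_alerts):
    """Return (overall, per_rule) fractions of reviewed alerts marked false."""
    totals, false_counts = Counter(), Counter()
    for rule, was_real in reviewed_alerts:
        totals[rule] += 1
        if not was_real:
            false_counts[rule] += 1
    if not totals:
        raise ValueError("no reviewed alerts to audit")
    overall = sum(false_counts.values()) / sum(totals.values())
    per_rule = {rule: false_counts[rule] / totals[rule] for rule in totals}
    return overall, per_rule


# Hypothetical sample: each pair is (rule that fired, operator judged it real?).
sample = [
    ("motion", False), ("motion", False), ("motion", False), ("motion", True),
    ("line_crossing", False), ("line_crossing", True),
]
overall, per_rule = false_alarm_share(sample)
print(f"overall false-alarm share: {overall:.0%}")
for rule, share in per_rule.items():
    print(f"  {rule}: {share:.0%}")
```

A per-rule breakdown shows which analytics generate most of the noise. That makes the next conversation with a vendor specific: why does this rule fire, and what evidence supports each alert?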

Q4: Does compliance guarantee ethical safety?

No. Compliance proves you met yesterday’s standard. Ethics define tomorrow’s.

Q5: What makes ArcadianAI’s Ranger different?

Ranger understands scenes, not just pixels. It correlates across feeds, learns intent, and provides explainable reasoning behind every alert.

Conclusion & Call to Action

AI was never the enemy. Blind confidence was.

The future of surveillance belongs to those who question their algorithms as much as they question their adversaries. ArcadianAI’s Ranger replaces blind faith with informed trust—transforming every camera from a silent watcher into an intelligent, accountable partner.

See ArcadianAI in Action → Get Demo – ArcadianAI

Security Glossary (2025 Edition)

AI Surveillance Risks — Vulnerabilities arising from over-reliance on automated detection and interpretation without human oversight.

Automation Bias — The human tendency to trust machine output over personal judgment, often leading to oversight failures.

Bridge (ArcadianAI) — A secure edge device connecting existing cameras to the ArcadianAI cloud platform for contextual AI processing.

Context-Aware AI — Systems that evaluate environmental, temporal, and behavioral factors beyond object detection.

Explainable AI (XAI) — Models designed to provide human-readable reasoning for their predictions or alerts.

False Positive — An incorrect alert triggered by AI detecting a threat where none exists.

Human-in-the-Loop — A design approach keeping human oversight within automated systems for accountability.

NDAA Compliance — U.S. federal restrictions ensuring security equipment is free from banned foreign components.

Object Detection — Basic AI vision technique identifying objects but lacking situational comprehension.

Ranger (ArcadianAI) — ArcadianAI’s adaptive intelligence layer providing context-aware surveillance, event correlation, and transparent auditing.

VSaaS (Video Surveillance as a Service) — Cloud-based management and storage of surveillance footage and analytics.

VMS (Video Management System) — Software controlling multiple cameras, traditionally on-premise.

Verkada — U.S.-based closed-cloud surveillance vendor known for simplicity but limited transparency.

Genetec — Canadian hybrid VMS vendor offering rule-based analytics and strong compliance focus.

Eagle Eye Networks — Cloud VSaaS platform emphasizing remote access but limited contextual AI.

Rhombus — Cloud surveillance provider offering device-integrated analytics with basic automation.

Agentic AI — Next-generation artificial intelligence capable of autonomous planning and adaptation beyond static rule sets.

ArcadianAI — A North American cloud-native, camera-agnostic platform delivering context-aware, ethical, and transparent surveillance intelligence.

© 2025 ArcadianAI – Transforming cameras into conscious security intelligence.
